There are several reasons why you might want to reindex all index documents. These include changes to the Search Engine configuration that affect how text gets indexed (for example, to activate features such as stemming) and changes in configuration or code that cause different data to be sent to the Search Engine. In any case, reindexing a whole index is an expensive operation that takes some time.

Reindexing Elastic Social indices

Elastic Social indices can be reindexed by invoking the JMX operation `reindex` of the interface `com.coremedia.elastic.core.api.search.management.SearchServiceManager` of an Elastic Social application.

You can find the `SearchServiceManager` MBean of the `elastic-worker` web application for tenant `media` under the object name `com.coremedia:application=elastic-worker,type=searchServiceManager,tenant=media`.

The operation takes the name of the index without prefix and tenant as its parameter. For example, to reindex the Solr core `blueprint_media_users`, invoke the operation with the parameter `users`. The operation then clears the index and afterwards reindexes every index document.
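For scripted maintenance, the JMX operation can also be invoked from a small Java client. The following is a minimal sketch, not part of the product: the JMX service URL (an RMI connector on `localhost:1099`) is a hypothetical placeholder for however your elastic-worker exposes JMX, the operation is assumed to take a single `java.lang.String` argument, and the helper that derives `users` from `blueprint_media_users` assumes core names of the form prefix, underscore, tenant, underscore, index, as in the example above.

```java
import javax.management.MBeanServerConnection;
import javax.management.MalformedObjectNameException;
import javax.management.ObjectName;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

/** Sketch: invoke the "reindex" operation of an Elastic Social SearchServiceManager MBean. */
final class ElasticSocialReindex {

    /** Builds the object name documented for an Elastic Social application and tenant. */
    static ObjectName searchServiceManagerName(String application, String tenant) {
        try {
            return new ObjectName("com.coremedia:application=" + application
                    + ",type=searchServiceManager,tenant=" + tenant);
        } catch (MalformedObjectNameException e) {
            throw new IllegalArgumentException("Invalid application or tenant name", e);
        }
    }

    /** Assumption: core names look like prefix_tenant_index, e.g. blueprint_media_users -> users. */
    static String indexParameter(String coreName, String prefix, String tenant) {
        String expectedStart = prefix + "_" + tenant + "_";
        if (!coreName.startsWith(expectedStart)) {
            throw new IllegalArgumentException(coreName + " does not start with " + expectedStart);
        }
        return coreName.substring(expectedStart.length());
    }

    /** Connects to the JMX endpoint and invokes reindex(index) on the given MBean. */
    static Object reindex(String jmxServiceUrl, ObjectName mbean, String index) throws Exception {
        JMXServiceURL url = new JMXServiceURL(jmxServiceUrl);
        try (JMXConnector connector = JMXConnectorFactory.connect(url)) {
            MBeanServerConnection connection = connector.getMBeanServerConnection();
            // Assumption: the operation signature is reindex(java.lang.String).
            return connection.invoke(mbean, "reindex",
                    new Object[] {index}, new String[] {String.class.getName()});
        }
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical endpoint; pass the JMX service URL of your elastic-worker as first argument.
        String jmxUrl = args.length > 0 ? args[0]
                : "service:jmx:rmi:///jndi/rmi://localhost:1099/jmxrmi";
        ObjectName mbean = searchServiceManagerName("elastic-worker", "media");
        String index = indexParameter("blueprint_media_users", "blueprint", "media");
        Object result = reindex(jmxUrl, mbean, index);
        System.out.println("reindex(" + index + ") returned " + result);
    }
}
```

Keeping the object name and the parameter derivation in separate helpers makes it possible to check them without a running JMX server.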

Reindexing Content Feeder and CAE Feeder indices

The simplest approach for Content Feeder and CAE Feeder indices is to clear the existing index and restart the Feeder. The Feeder will then reindex everything from scratch. In almost all cases this is not what you want, because search will be unavailable (or return only partial results) until reindexing has completed. See the section called “Clear Search Engine index” and Section 5.3.2, “Resetting” for instructions on how to clear an existing index for the Content Feeder and the CAE Feeder, respectively.

A better solution is to feed a new index from scratch while search keeps using the old one until the new index is up to date. Applications switch to the new index when reindexing is complete, and once everything is fine, the old index can be deleted. This approach not only avoids search downtime but also makes it possible to test changes before enabling the new index for all search applications.

To prepare a new index, you need to set up an additional Feeder and configure it to feed the new index. The new Feeder instance will eventually replace the existing Feeder instance.

You can follow these steps to reindex from scratch:

Add a new Solr core for the new index. The Solr Admin UI supports adding Solr cores in general but currently still lacks support for named config sets (SOLR-6728), so you have to create the new core with an HTTP request. To do so, send a request to the following URL with the correct parameters, for example by opening it in your browser:

`http://<hostname>:<port>/solr/admin/cores?action=CREATE&name=<name>&instanceDir=cores/<name>&configSet=<configSet>&dataDir=data`

- Replace `<hostname>` and `<port>` with the host name and port of the servlet container that runs the Apache Solr master.
- Replace `<name>` with the name of the new core. Note that it appears twice in the above URL. You can choose any name you like as long as neither a core nor a directory below `<solr-home>/cores` with that name exists yet. If you are using Elastic Social, you should also avoid names that start with the configured `elastic.solr.indexPrefix` followed by an underscore (for example, `blueprint_`) to avoid name collisions with automatically created Solr cores.
- Replace `<configSet>` with the name of the config set of the new core. This should be `content` for Content Feeder indices and `cae` for CAE Feeder indices. Alternatively, you can set it to the name of a custom config set if your project uses differently named config sets.
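If you prefer to script this step instead of using a browser, the CREATE request can be sent with the JDK's built-in HTTP client. This is a sketch under the assumption that the Solr master runs at `localhost:44080` (the host and port used in the examples of this section) and requires no authentication; the class and method names are illustrative. Parameter values are URL-encoded, so `instanceDir` is sent as `cores%2Fstudio2`, which Solr decodes like any other query parameter.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

/** Sketch: create a Solr core with a named config set through the CoreAdmin CREATE action. */
final class CreateSolrCore {

    /** Builds the CREATE URL; parameter order follows the URL template, values are URL-encoded. */
    static String createUrl(String host, int port, String name, String configSet) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("action", "CREATE");
        params.put("name", name);
        params.put("instanceDir", "cores/" + name); // the core name appears a second time here
        params.put("configSet", configSet);         // "content", "cae", or a custom config set
        params.put("dataDir", "data");
        String query = params.entrySet().stream()
                .map(e -> e.getKey() + "=" + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
        return "http://" + host + ":" + port + "/solr/admin/cores?" + query;
    }

    /** Sends a GET request, prints the response body and returns the HTTP status code. */
    static int get(String url) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.body());
        return response.statusCode();
    }

    public static void main(String[] args) throws Exception {
        // Example values: Solr master on localhost:44080, new core studio2 with config set content.
        String url = createUrl("localhost", 44080, "studio2", "content");
        System.out.println("HTTP " + get(url));
    }
}
```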

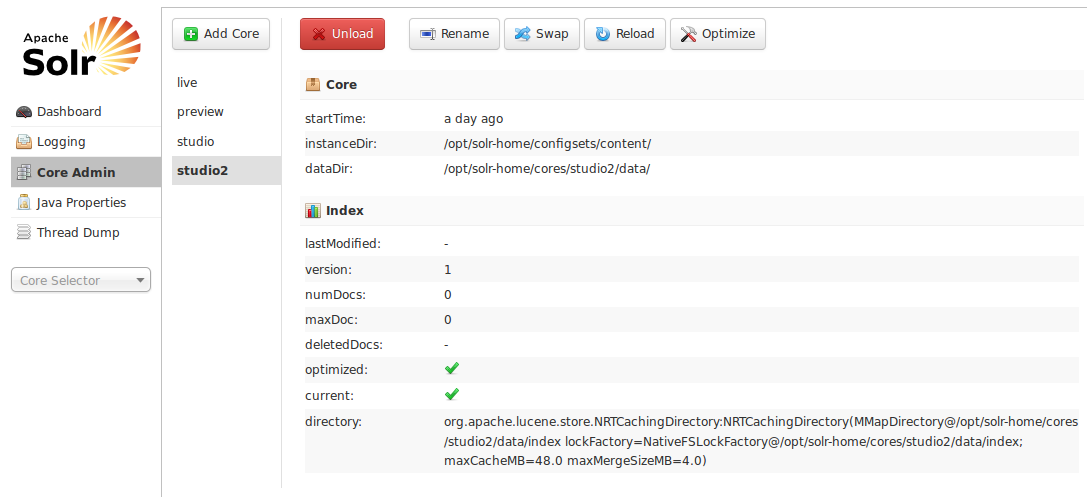

Check that the new core was successfully created in the directory `<solr-home>/cores`. There should be a new subdirectory with the name of the newly created core which contains a `core.properties` file. For example, if a core `studio2` with config set `content` was created, then `<solr-home>/cores/studio2/core.properties` should contain something like:

```properties
#Written by CorePropertiesLocator
#Thu Dec 11 17:16:47 CET 2014
name=studio2
dataDir=data
configSet=content
```
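The check can be automated by loading the file with `java.util.Properties`, which skips the `#` comment lines. A minimal sketch; the class name and the command-line arguments are illustrative assumptions:

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Properties;

/** Sketch: verify name and configSet in a core's core.properties file. */
final class CorePropertiesCheck {

    /** Loads core.properties content; lines starting with '#' are comments and ignored. */
    static Properties parse(Reader reader) {
        Properties props = new Properties();
        try {
            props.load(reader);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    static Properties parse(String content) {
        return parse(new StringReader(content));
    }

    /** True if the file declares the expected core name and config set. */
    static boolean matches(Properties props, String expectedName, String expectedConfigSet) {
        return expectedName.equals(props.getProperty("name"))
                && expectedConfigSet.equals(props.getProperty("configSet"));
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 4) {
            System.err.println("Usage: java CorePropertiesCheck <solr-home> <core-dir> <name> <configSet>");
            return;
        }
        Path file = Path.of(args[0], "cores", args[1], "core.properties");
        try (Reader reader = Files.newBufferedReader(file, StandardCharsets.UTF_8)) {
            Properties props = parse(reader);
            System.out.println(file + ": name=" + props.getProperty("name")
                    + ", configSet=" + props.getProperty("configSet")
                    + (matches(props, args[2], args[3]) ? " (OK)" : " (unexpected)"));
        }
    }
}
```

For example, passing your Solr home followed by `studio2 studio2 content` should report OK after the core has been created. The same check applies after swapping cores later in this procedure, when the directory `studio2` is expected to report `name=studio`.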

You can also open the Solr Admin UI at `http://<hostname>:<port>/solr`, which shows the newly created core.

Set up a new Feeder instance and configure it to feed into the new Solr core by setting the property `feeder.solr.url` accordingly. Do not change the property `feeder.solr.collection`. For example, to configure a newly set up Content Feeder to feed into the new core with name `studio2`, set in `WEB-INF/application.properties`:

```properties
feeder.solr.url=http://localhost:44080/solr/studio2
feeder.solr.collection=studio
```

In case of a CAE Feeder, you must also configure it with a separate empty database schema.

Start the new Feeder and wait until the new index is up to date, for example by checking the log files or by searching the new index for a recent document change. Depending on the size of the content repository, this may take some time.
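One way to check whether the new index has caught up is to run the same query against the old and the new core and compare the hit counts. The sketch below assumes the Solr master at `localhost:44080` with the example cores `studio` and `studio2`. The query is whatever identifies your recent change in your schema (the default `*:*` simply counts all documents), and `numFound` is read with a regular expression rather than a JSON library to keep the example dependency-free. Small differences are expected while the old Feeder keeps updating the old core.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Sketch: compare hit counts of the same query in the old and the new core. */
final class NewIndexCheck {

    private static final Pattern NUM_FOUND = Pattern.compile("\"numFound\"\\s*:\\s*(\\d+)");

    /** Builds a select URL that only asks for the hit count (rows=0) in JSON format. */
    static String selectUrl(String solrBaseUrl, String core, String query) {
        return solrBaseUrl + "/" + core + "/select?q="
                + URLEncoder.encode(query, StandardCharsets.UTF_8) + "&rows=0&wt=json";
    }

    /** Extracts numFound from a Solr JSON response, or returns -1 if it is missing. */
    static long numFound(String json) {
        Matcher m = NUM_FOUND.matcher(json);
        return m.find() ? Long.parseLong(m.group(1)) : -1L;
    }

    static long count(String solrBaseUrl, String core, String query) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(URI.create(selectUrl(solrBaseUrl, core, query))).build();
        String body = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString()).body();
        return numFound(body);
    }

    public static void main(String[] args) throws Exception {
        String solr = "http://localhost:44080/solr";
        // Hypothetical query for a recently changed document; adapt field and value to your schema.
        String query = args.length > 0 ? args[0] : "*:*";
        System.out.println("old core studio:  " + count(solr, "studio", query));
        System.out.println("new core studio2: " + count(solr, "studio2", query));
    }
}
```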

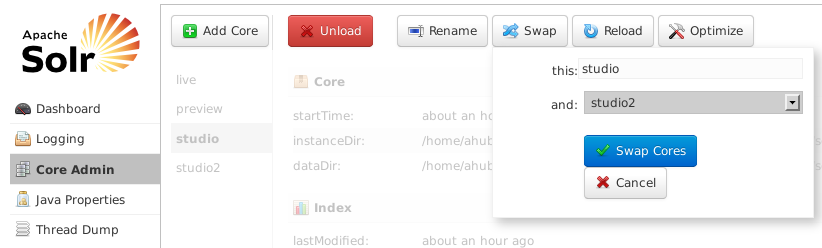

Stop the Feeders for both the old and new Solr core.

To activate the new index, swap the cores so that the new core replaces the existing one. You can swap cores with the [SWAP] button in the Solr Admin UI. Afterwards, all search applications automatically use the new core, which is now available under the original core name.

It's important to understand that this operation does not change the directory structure in `<solr-home>/cores` but only the `name` property in the respective `core.properties` files. In the example of swapping cores `studio` and `studio2`, you now have a newly indexed Solr core named `studio` in the directory `<solr-home>/cores/studio2`. You can verify this by looking into its `core.properties` file:

```properties
#Written by CorePropertiesLocator
#Thu Dec 11 17:26:33 CET 2014
name=studio
dataDir=data
configSet=content
```

Reconfigure the new Feeder instance to use the new core under the original name. To this end, change the value of the property `feeder.solr.url` accordingly, then start the new Feeder instance. For example, to configure the Content Feeder to feed into the new core which is now available under the name `studio`, set in `WEB-INF/application.properties`:

```properties
feeder.solr.url=http://localhost:44080/solr/studio
feeder.solr.collection=studio
```

If you're using Solr replication, the new index is replicated automatically to the Solr slaves after a commit is made on the Solr master for the new core. Restarting the Feeder in the previous step caused a Solr commit, so replication should have started automatically. If not, you can trigger a Solr commit with a request to the following URL, for example in your browser:

`http://localhost:44080/solr/studio/update?commit=true`

This commits the Solr core named `studio` on the Solr master running on localhost and port 44080. Note that, depending on the index size, replication of the new core may take from a few seconds up to a few minutes, during which searches on the Solr slaves still use the old index. You can follow the progress of replication in the Admin UI of the Solr slave after selecting the corresponding core.
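The commit, and a rough check whether a slave has caught up, can be scripted as follows. This sketch assumes that the cores use Solr's standard `ReplicationHandler` at the path `/replication`, whose `command=indexversion` request reports the index version of a master or slave, and that the slave URL passed as the first argument is reachable; `slave-host` is a hypothetical placeholder. The polling interval and timeout are arbitrary choices, and the JSON is again read with a regular expression for brevity.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Sketch: commit on the master, then poll a slave until it reports the master's index version. */
final class CommitAndReplication {

    private static final Pattern INDEX_VERSION = Pattern.compile("\"indexversion\"\\s*:\\s*(\\d+)");
    private static final HttpClient CLIENT = HttpClient.newHttpClient();

    /** Commit URL for a core, e.g. http://localhost:44080/solr/studio/update?commit=true. */
    static String commitUrl(String solrBaseUrl, String core) {
        return solrBaseUrl + "/" + core + "/update?commit=true";
    }

    /** Assumption: the ReplicationHandler is registered at /replication for this core. */
    static String indexVersionUrl(String solrBaseUrl, String core) {
        return solrBaseUrl + "/" + core + "/replication?command=indexversion&wt=json";
    }

    /** Extracts "indexversion" from a JSON response, or returns -1 if it is absent. */
    static long indexVersion(String json) {
        Matcher m = INDEX_VERSION.matcher(json);
        return m.find() ? Long.parseLong(m.group(1)) : -1L;
    }

    static String get(String url) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).build();
        return CLIENT.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws Exception {
        String master = "http://localhost:44080/solr";
        // Hypothetical slave address; pass your Solr slave's base URL as first argument.
        String slave = args.length > 0 ? args[0] : "http://slave-host:44080/solr";
        String core = "studio";
        get(commitUrl(master, core));
        long masterVersion = indexVersion(get(indexVersionUrl(master, core)));
        // Poll every 5 seconds for up to 60 attempts (about 5 minutes).
        for (int i = 0; i < 60; i++) {
            long slaveVersion = indexVersion(get(indexVersionUrl(slave, core)));
            System.out.println("master=" + masterVersion + " slave=" + slaveVersion);
            if (slaveVersion == masterVersion) {
                System.out.println("Replication of core " + core + " is complete.");
                return;
            }
            Thread.sleep(5_000);
        }
        System.out.println("Slave has not caught up yet; check the Admin UI of the slave.");
    }
}
```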

To clean things up, you can now unload the old Solr core from the Solr master with the [Unload] button in the Solr Admin UI. In the example, this would be the core named `studio2`. If you like, you can also delete the old Feeder installation and the directory of the old Solr core with its index. In this example, that would be `<solr-home>/cores/studio`.

Note: You can use HTTP requests to perform the [SWAP] and [Unload] actions instead of using the Solr Admin UI as described above. For details, see the Solr Reference Guide at https://cwiki.apache.org/confluence/display/solr/CoreAdmin+API.
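As a sketch of the HTTP variant, the following class builds the CoreAdmin `SWAP` and `UNLOAD` requests for the example cores and sends one of them, selected by the first argument. The Solr master address `localhost:44080` is taken from the examples above; authentication, error handling, and the class name are assumptions to adapt. Without further options, `UNLOAD` removes the core from Solr but leaves its directory on disk, which matches the separate deletion step described above.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Sketch: swap the example cores or unload the old one via the CoreAdmin API. */
final class SwapAndUnload {

    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    /** CoreAdmin SWAP: exchanges the names of two cores. */
    static String swapUrl(String solrBaseUrl, String core, String other) {
        return solrBaseUrl + "/admin/cores?action=SWAP&core=" + enc(core) + "&other=" + enc(other);
    }

    /** CoreAdmin UNLOAD: removes the core from Solr but keeps its files. */
    static String unloadUrl(String solrBaseUrl, String core) {
        return solrBaseUrl + "/admin/cores?action=UNLOAD&core=" + enc(core);
    }

    static int get(String url) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).build();
        return HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString()).statusCode();
    }

    public static void main(String[] args) throws Exception {
        String master = "http://localhost:44080/solr";
        String action = args.length > 0 ? args[0] : "swap";
        String url;
        if (action.equals("swap")) {
            // The new core (studio2) takes over the name "studio" and vice versa.
            url = swapUrl(master, "studio", "studio2");
        } else if (action.equals("unload")) {
            // After the swap, "studio2" names the old index.
            url = unloadUrl(master, "studio2");
        } else {
            System.err.println("Usage: java SwapAndUnload swap|unload");
            return;
        }
        System.out.println(action + ": HTTP " + get(url));
    }
}
```

Run it with `swap` once both Feeders are stopped, and with `unload` only after the new index has been verified, because after the swap the name `studio2` refers to the old index.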